October 25, 2023

Authors: Riina Vuorikari and Stefano Kluzer

At the annual ALL DIGITAL Summit in Zagreb (AD Summit 2023), September 26-27 2023, the ADA project ran a 3-session workshop titled “Using DigComp for learning activities with AI tools at foundation, intermediate, and advanced level”.

Aims and motivations of the workshop

The aim of the workshop was to show how the DigComp framework helps design educational activities that use generative AI tools for digital content creation among other purposes. For this, Riina Vuorikari and Stefano Kluzer (authors of DigComp 2.2) presented a list of 27 knowledge, skills and attitudes (KSA) examples deemed specifically relevant for generative AI tools and their use in education.

The idea was to create activities with progressive difficulty, from simple tasks at the Foundation level to more complex ones at the Intermediate and Advanced levels (each level had its own session), reflecting DigComp’s proficiency levels. The workshop experience aimed to inform and inspire participants for the design of future educational activities for their students and learners.

Behind these aims was, in the first place, the awareness that during 2023 both interest in and concerns about the use of generative AI tools in education have grown significantly. However, many educators have had few opportunities to experiment with them and to reflect on and discuss them openly with experts, on the basis of first-hand, practical experience rather than on ideological grounds. This led us to create an opportunity to use ChatGPT during the workshop and to plan, for each session, some introductory explanations about DigComp and prompt creation, followed by hands-on group work and a final sharing and discussion moment.

Another input to the ADA Workshop’s design was the perception that many educators are still not aware of the latest version of the framework, DigComp 2.2, especially its 70+ KSA examples referring to citizens interacting with AI systems. These were created as part of the update of the framework’s Dimension 4, which identifies KSA examples for all of DigComp’s 21 specific competences.

The approach to ChatGPT proposed in the workshop, drawn from the recent experience of the Open the Box project, is very similar to the one described by Mitch Resnick (Professor at MIT Media Lab and founder of the Scratch project) in a blog post on Medium, 24/04/2023: “Teachers and instructors are also using ChatGPT to support creative learning in their classes. I know someone who uses ChatGPT to refine discussion prompts for classes that she teaches, entering a draft prompt into ChatGPT to gain insights about the type of discussion it might generate. She treats her interactions with ChatGPT as a brainstorming session: posing different prompts to ChatGPT, seeing how it responds, and iteratively refining her prompts until she converges on a version that she feels could stimulate a rich conversation among the students.” In the ADA Workshop, we used the term ‘conversation’ rather than ‘brainstorming’, but the approaches are very similar.

Workshop organisers and participants

The ADA Workshop was designed collectively by AD experts Sandra Troia and Stefano Kluzer; DigComp and AI expert Riina Vuorikari (also an AD Advisory Board member); Nicola Bruno, coordinator of the Open the Box project at DataNinja; and Žarko Čižmar, executive director of the Croatian NGO Telecentar and organiser of the AD Summit 2023 in Zagreb.

The workshop targeted educators and trainers from secondary school to adult education, along with managers and designers of E&T organisations. In the end, 37 people joined the workshop: 13 attended all three sessions and the others attended one or two sessions.

A closer look at the workshop sessions

Sessions were designed to last 1.5 hours each, split into three parts: Introduction (20 minutes), Group work (50 minutes), and Feedback and discussion (20 minutes). As we shall see, the actual timings turned out somewhat different.

Introduction part

As anticipated, each session started with a ‘theory’ part by Riina Vuorikari focusing on DigComp levels and what to keep in mind when designing learning activities at a given level. This part also included the presentation of the pre-selected KSA examples that illustrate the use of AI-driven tools for digital content creation. These examples were also used to write some simple learning outcomes.

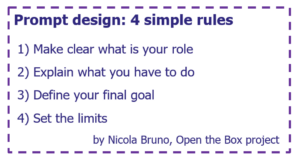

The next step was the presentation by Nicola Bruno of 4 simple rules for prompt design (see below), some suggestions about how to ‘converse’ with ChatGPT, and the assignment to be carried out during the group work activities in each session.

Finally, Sandra Troia presented the Padlets (one for each session) where group work participants could find some useful resources and were expected to share the results of their interaction with ChatGPT: Padlet Session 1, Padlet Session 2, and Padlet Session 3.

This part eventually lasted about 40 minutes in all sessions because, due to the participants’ turnover, all the presentations had to be given again each time.

Group work

The hands-on part of the sessions entailed forming small groups of participants (ideally 3-4 people per group), each including at least one person with some experience in using ChatGPT. Some groups stayed the same throughout the three sessions, while others were formed anew each time.

In Session 1, the assignment was “Ask ChatGPT to help you write some relevant content related to the use of Generative AI tools in education”. The ‘relevant content’ might be for instance a presentation. All groups were asked to work on this topic, differentiating the activity with respect to the age of learners and other aspects.

As envisaged by the DigComp Foundation level, the activity was simple and instructions were given step by step to help learners/participants start interacting with generative AI tools using prompts. The idea was that, over the course of the exercise, learners would gain autonomy and become more independent users of such tools. Also, the fact that there was only one computer per group forced learners to interact with one another and, through incidental peer learning, to be guided in their tasks where needed.

The organisers pointed out a number of DigComp competences that the exercise touched upon (e.g. 1.1, 1.2, 2.1, 3.1) and some KSA examples (e.g. AI05, AI08, AI29, AI33, AI49, AI71, 23, 25, 28) that are suitable for foundation level learning activities. In theory, these examples could easily be used to write out learning outcomes at the foundation level; however, seeing that the participants were already struggling with the complexities of “conversing” with ChatGPT, they were not bothered with an additional task.

In Session 2, following DigComp’s view of digital competence proficiency, intermediate-level learning activities should focus on well-defined routine tasks for users to solve straightforward problems independently. An important aspect at this level is to plan activities that allow learners to use generative AI tools according to their own needs, so some freedom should be built into the learning assignment (e.g. participants chose topics and subject areas that they were usually teaching). Again, a number of KSA examples were pointed out to guide educators further in their planning work.

The group work assignment of Session 2 was “Use ChatGPT for designing a learning activity on a subject you are interested in”; the lesson should include a presentation and one individual or group activity and a few other limits/constraints should be taken into account.

In Session 3, the complexity of activities grew. At the Advanced level, the focus is on designing learning activities where learners have to apply their new skills to more complex non-routine tasks, and independently choose the most appropriate tools for the given task.

The group work assignment was “Use ChatGPT for designing a course plan on a subject you are interested in”. A simple template to write the course plan was provided and different variables were suggested to be introduced in the conversation with ChatGPT, such as overall course duration, length of lessons, number of students involved, available digital devices and connectivity etc. Importantly, the participants were also asked to reflect on writing intended learning outcomes using DigComp examples and to consider what kind of evaluation should be planned.
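As a rough illustration of how the variables listed above might be fed into the opening turn of such a conversation, here is a minimal sketch in Python. It is not the template used in the workshop: the class `CoursePlanRequest`, the function `build_prompt`, the field names, and the sample values are all hypothetical, chosen only to mirror the variables mentioned in the assignment.

```python
from dataclasses import dataclass


@dataclass
class CoursePlanRequest:
    """Hypothetical container for the variables named in the assignment."""
    subject: str
    course_duration_weeks: int
    lesson_length_minutes: int
    number_of_students: int
    devices: str
    connectivity: str


def build_prompt(req: CoursePlanRequest) -> str:
    """Combine the variables into one opening prompt for a chat-based AI tool."""
    return (
        f"Help me design a course plan on {req.subject}. "
        f"The course lasts {req.course_duration_weeks} weeks, "
        f"with lessons of {req.lesson_length_minutes} minutes, "
        f"for {req.number_of_students} students. "
        f"Available devices: {req.devices}. "
        f"Connectivity: {req.connectivity}. "
        "Suggest intended learning outcomes and an evaluation approach."
    )


# Sample values are invented for illustration only.
example = CoursePlanRequest(
    subject="media literacy",
    course_duration_weeks=6,
    lesson_length_minutes=45,
    number_of_students=20,
    devices="one laptop per group",
    connectivity="shared Wi-Fi",
)
print(build_prompt(example))
```

Changing one field at a time and comparing the replies is one simple way to keep the follow-up ‘conversation’ focused on a single variable.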

Group work lasted about 30-40 minutes depending on the session. Although more time would definitely have been useful, this duration proved sufficient for participants to start exploring creative uses of ChatGPT, produce enough results to share, and come up with reflections.

Feedback and discussion

When assignments were ready, the results of the interactions with ChatGPT were uploaded on a shared Padlet and a plenary-style feedback session started. This was an occasion for groups to share the prompts that they had created and how they eventually came up with an outcome that was satisfactory. Through this illustration, everyone saw diverse ways to use prompting, for example, to shorten the text, to refine it with citations, to better target it for the audience, etc.

Participants were also asked to share 3 take-aways providing reflections on the tasks done, the usefulness of the tool in general, and often also ethical considerations.

The feedback and discussion sessions would have benefited from more time as they were rich and lively, lasting from 15 to 30 minutes (in Session 2 the end of the session was actually delayed).

Conclusions

The workshop was very much appreciated by the participants and we agreed to meet again (this time online) in early December 2023 to share any experiences, findings, and problems stemming from putting into practice what was learned in Zagreb.

With respect to the workshop’s initial aims, we could test the validity of the list of KSA examples selected from DigComp 2.2 that we felt are relevant for educational activities related to generative AI. Since the KSA examples in DigComp 2.2 do not refer to any specific AI applications (reflecting DigComp’s technology neutrality), it was possible to use them in a more contextualized perspective such as was the case in the workshop with ChatGPT. Secondly, it was illustrated how the KSA examples could be used as a basis to define the learning outcomes of an educational activity. Last, it was discussed that adapting existing KSA examples to create new items that explicitly focus on generative AI would be a useful support for educators.

For instance, referring again to Mitch Resnick’s blog post mentioned earlier where he talks about the new skills people will have to learn to use generative AI systems effectively, one might envisage a new Knowledge example (a variant of AI29 in DigComp 2.2, Annex 2) “Knows the best strategies for writing prompts or questions for generative AI systems”, and related to it a new Skill example “Knows how to ask follow-up questions to iteratively refine the responses from generative AI systems”.

The workshop’s group work assignments also allowed us to confirm that the ‘complexity of tasks’ is a relevant aspect for proficiency progression in this as in other domains, and is reflected not so much in mastering technical functionality (writing the prompts for ChatGPT is technically simple), but rather in the capacity to run articulate, sequentially coherent and effective (to the purpose) ‘conversations’ with the AI-based system. This capacity, in turn, seems to depend on two factors. On the one hand, it depends on cognitive abilities (e.g. making sense of the system’s replies and understanding why they might differ from what was intended/expected) and linguistic abilities (e.g. writing the ‘right’ question, ‘playing’ with the language to obtain better results), which are probably partly specific to each generative AI system. On the other hand, and in a significant way, what we might define as disciplinary or content-related knowledge is also essential to assess the quality and usefulness of the AI system’s replies.